1) AI is a technology whose implications deserve careful, thoughtful consideration; by itself, it is not inherently harmful.

2) Hobbyists currently implementing AI in software haven't considered any of these ethical implications in their work.

3) The real danger of AI is not an evil super-genius AI, but the way it reflects the mental and emotional flaws of its creators.

4) AI will succeed because normies have a lower threshold for quality and will eat anything as long as they can continue consuming.

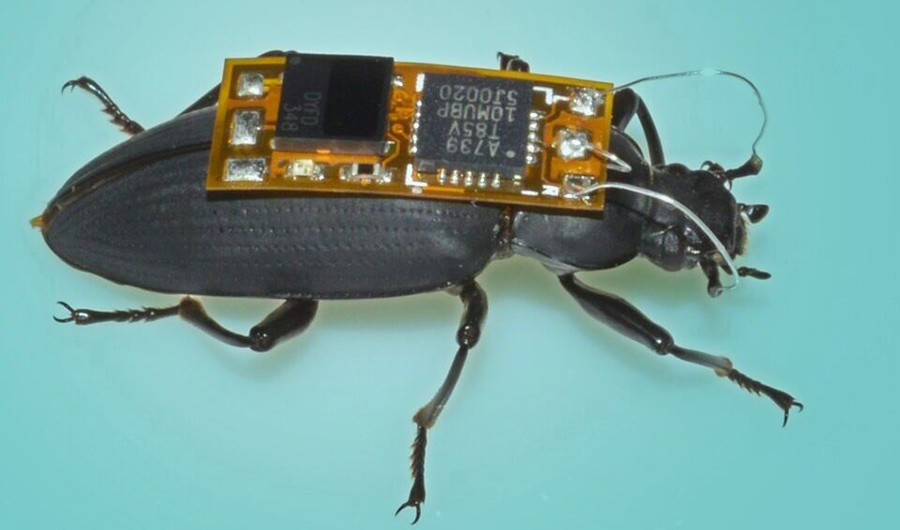

I'm seeing some concerning trends cropping up with AI. It started with art theft through "AI art programs", which just montage someone else's perspective on an artistic piece. Now we have really obvious "please train our AI" captcha content that targets more emotional, human judgments than just detecting traffic crossings.

Here are a few I saw this week.

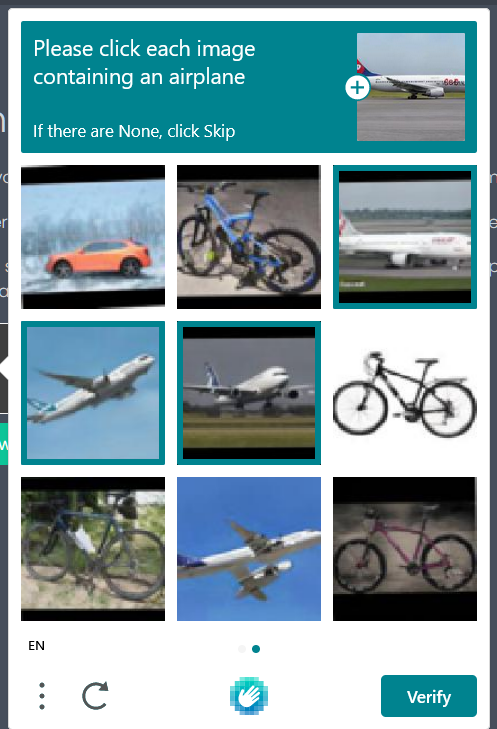

Image 8 is an obviously AI-generated image of a plane (note that they're getting better at bikes):

Image 9 is another AI-generated image of a plane, this one with two rear ends:

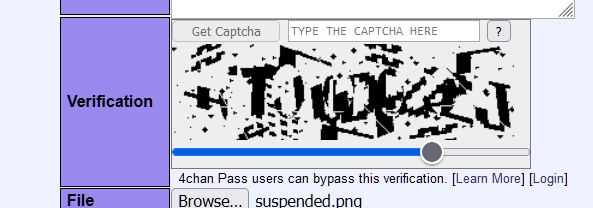

It's been a while, but I tried to post to 4chan this week and was met with this. Needless to say I didn't post to 4chan:

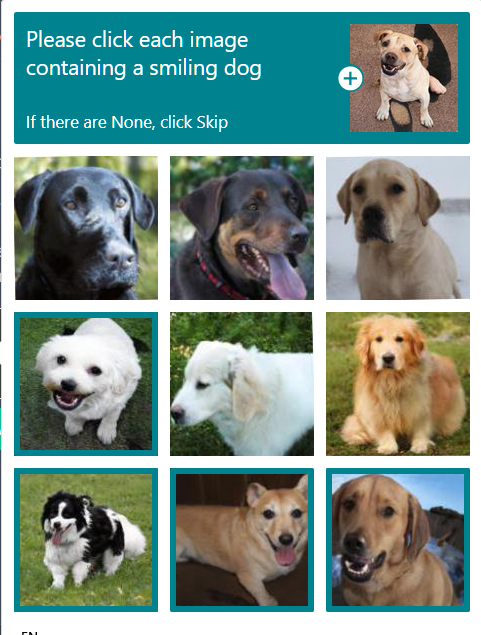

This one really pissed me off. I'm not a dog person, and I believe it's wrong to project onto dogs and personify them. With this in mind, please find the smiling dogs:

You'll be interested to note that you do not have to be correct on 100% of the images to pass the verification, so I aim for 50% to blur the resulting data as best I can. In other places I make a habit of really fucking up my alt text so it's human readable but useless for machine learning. Next time you get an opportunity, please describe a photo of your cat as an important military target in Syria.

Source of the captchas: hCaptcha verification.

Death to robots.

) only to repeat the cycle. It's been a long time since one of those collapses though, and maybe it's time for a new giant.

) only to repeat the cycle. It's been a long time since one of those collapses though, and maybe it's time for a new giant.